I guess it’s because you have no apps using postgresql, but I am not totally sure.

I have migrated my test instance with only one minor issue: php7.3-fpm was dead and I had to restart it manually (without any issue after that). There are not a lot of apps on it, but all are working perfectly.

Thanks for your hard work !

For me both php7.3-fpm and rspamd were dead.

Also, yunohost service status php7.3-fpm shows start_on_boot: disabled. Should I manually enable the service to start on boot?

Edit: restarting rspamd does not fix it
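To see at a glance which services came back dead after the migration, a small helper like the one below could be used. It is only a sketch: the function name inactive_services is made up for illustration, and the service names are simply the ones mentioned in this thread. Whether YunoHost expects php7.3-fpm to be enabled at boot after the migration is not confirmed here; systemctl enable is just the standard systemd way to do it if you decide to.

```shell
# Sketch only: print the name of every given service that systemd
# does not report as active. The function name is hypothetical.
inactive_services() {
    for svc in "$@"; do
        if ! systemctl is-active --quiet "$svc"; then
            echo "$svc"
        fi
    done
}

# Usage (as root on the migrated server):
#   inactive_services php7.3-fpm rspamd
#   systemctl enable --now php7.3-fpm   # start it now and at every boot
```

This only reports the state. It will not help with a service that crashes on startup, as rspamd does in the post above.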

Hmm, this could be important for users running on an ARM system:

admin@pi4:~ $ /usr/bin/rspamd -c /etc/rspamd/rspamd.conf -f

Segmentation fault

Related issue (?): [BUG] rspamd segfaults on startup on aarch64 · Issue #3563 · rspamd/rspamd · GitHub

Backtrace:

Reading symbols from /usr/bin/rspamd...

Reading symbols from /usr/lib/debug/.build-id/ad/71410657fa255a2eabe3ae72634dea02dc6caf.debug...

"/etc/rspamd/rspamd.conf" is not a core dump: file format not recognized

(gdb) set pagination 0

(gdb) run

Starting program: /usr/bin/rspamd

[Thread debugging using libthread_db enabled]

Using host libthread_db library "/lib/aarch64-linux-gnu/libthread_db.so.1".

Program received signal SIGSEGV, Segmentation fault.

lpeg_allocate_mem_low (sz=sz@entry=512) at ./contrib/lua-lpeg/lpvm.c:77

77 ./contrib/lua-lpeg/lpvm.c: No such file or directory.

(gdb) bt

#0 lpeg_allocate_mem_low (sz=sz@entry=512) at ./contrib/lua-lpeg/lpvm.c:77

#1 0x0000007ff7cd6148 in lp_match (L=0x7ff4c21378) at ./contrib/lua-lpeg/lptree.c:1153

#2 0x0000007ff7a49354 in ?? () from /lib/aarch64-linux-gnu/libluajit-5.1.so.2

#3 0x0000007ff7a9a2b0 in lua_pcall () from /lib/aarch64-linux-gnu/libluajit-5.1.so.2

#4 0x0000007ff7be2b08 in rspamd_rcl_jinja_handler (parser=<optimized out>, source=<optimized out>, source_len=2523, destination=0x7fffffdfa8, dest_len=0x7fffffdfb0, user_data=0x7ff2c028e0) at ./src/libserver/cfg_rcl.c:3741

#5 0x0000007ff7db3904 in ucl_parser_add_chunk_full (parse_type=UCL_PARSE_UCL, strat=UCL_DUPLICATE_APPEND, priority=0, len=2523, data=0x7ff7b0f000 "# System V init adopted top level configuration\n\n# Please don't modify this file as your changes might be overwritten with\n# the next update.\n#\n# You can modify '$LOCAL_CONFDIR/rspamd.conf.local.overr"..., parser=0x7ff44c0c00) at ./contrib/libucl/ucl_parser.c:2889

#6 ucl_parser_add_chunk_full (parser=0x7ff44c0c00, data=<optimized out>, len=<optimized out>, priority=0, strat=UCL_DUPLICATE_APPEND, parse_type=UCL_PARSE_UCL) at ./contrib/libucl/ucl_parser.c:2856

#7 0x0000007ff7be69c4 in rspamd_config_parse_ucl (cfg=cfg@entry=0x7ff2c028e0, filename=filename@entry=0x555556f438 "/etc/rspamd/rspamd.conf", vars=vars@entry=0x0, inc_trace=inc_trace@entry=0x0, trace_data=trace_data@entry=0x0, skip_jinja=skip_jinja@entry=0, err=err@entry=0x7ffffff128) at ./src/libserver/cfg_rcl.c:3911

#8 0x0000007ff7bea76c in rspamd_config_read (cfg=cfg@entry=0x7ff2c028e0, filename=0x555556f438 "/etc/rspamd/rspamd.conf", logger_fin=logger_fin@entry=0x5555566414 <config_logger>, logger_ud=logger_ud@entry=0x7ff4010000, vars=0x0, skip_jinja=0, lua_env=0x0) at ./src/libserver/cfg_rcl.c:3950

#9 0x0000005555567424 in load_rspamd_config (rspamd_main=rspamd_main@entry=0x7ff4010000, cfg=0x7ff2c028e0, opts=opts@entry=(RSPAMD_CONFIG_INIT_URL | RSPAMD_CONFIG_INIT_LIBS | RSPAMD_CONFIG_INIT_SYMCACHE | RSPAMD_CONFIG_INIT_VALIDATE | RSPAMD_CONFIG_INIT_PRELOAD_MAPS | RSPAMD_CONFIG_INIT_POST_LOAD_LUA), reload=reload@entry=0, init_modules=1) at ./src/rspamd.c:946

#10 0x000000555555b3cc in main (argc=<optimized out>, argv=<optimized out>, env=<optimized out>) at ./src/rspamd.c:1425

(gdb)
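In the session above, gdb seems to have taken the config path as a core file ("is not a core dump") and then started rspamd without its arguments. A non-interactive variant that passes the arguments through could look like this. It is a sketch: the wrapper name backtrace is hypothetical, while -batch, -ex and --args are regular gdb options.

```shell
# Sketch: run a program under gdb and print a backtrace when it stops.
# --args makes gdb treat everything after the program name as the
# program's own arguments instead of a core file.
backtrace() {
    gdb -batch -ex run -ex bt --args "$@"
}

# Usage:
#   backtrace /usr/bin/rspamd -c /etc/rspamd/rspamd.conf -f
```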

Edit: This is an upstream bug that was missed in the bullseye package for arm64 (possibly for some other architectures as well)

Until today, nobody had noticed this. I have replied to a similar issue for the ppc64el architecture: #1011305 - please replace (build) dependency luajit with lua on ppc64el - Debian Bug report logs

One possible fix is to build the package and distribute it through the YunoHost repo.

When I ran the migration to YunoHost 11, Matrix Synapse could not start because its package dependencies had not been updated. I restored it from my last backup and it now starts without issues. This workaround may work for other Python apps, but I have not tested it yet.

For every Python app I had to “force update” them all (but that was with the first versions of YNH 11).

I prefer this over a restoration because it uses the actual data, not those from a backup.
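For reference, the “force update” can also be done from the command line. The sketch below loops over app IDs and forces an upgrade of each. The function name and the IDs in the usage line are assumptions (check yours with yunohost app list), and the --force option should be confirmed with yunohost app upgrade --help on your version.

```shell
# Sketch: force-upgrade the given apps one by one, reporting failures
# without stopping the loop. The function name is hypothetical.
force_upgrade_apps() {
    for app in "$@"; do
        echo "Forcing upgrade of $app"
        yunohost app upgrade "$app" --force || echo "Upgrade of $app failed" >&2
    done
}

# Usage (app IDs are examples, not taken from a real install):
#   force_upgrade_apps synapse mautrix_signal
```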

I use a self-hosted yunohost system on a local IP (on a VM on proxmox server). I have installed the following applications for testing:

- Dokuwiki

- Etherpad Mypads

- Lstu

- Matomo

- PrettyNoemie CMS

- Rainloop

- Searx

- WordPress

- Zerobin

They have been working for a year.

After running:

curl https://install.yunohost.org/switchtoTesting | bash

I had to run the system update to see the migration appear.

But my “/” partition had less than 1 GB free because of node (20 versions installed) (see How to free up space? node uses more than 8 GB space - #12 by jorge). I cleaned up the old versions.

The message about this was buried in the migration logs, though. It should be a more visible alert.
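Since the warning is easy to miss, free space can be checked by hand before starting the migration. This is a minimal sketch: the helper names are made up, and the 1 GB threshold in the usage line is taken from the situation described above, not from any documented YunoHost requirement.

```shell
# Available space on a mount point, in kilobytes (POSIX df output format).
avail_kb() {
    df -Pk "$1" | awk 'NR==2 {print $4}'
}

# Succeed only if at least $2 KB are free on mount point $1.
has_free_kb() {
    [ "$(avail_kb "$1")" -ge "$2" ]
}

# Usage (1048576 KB = 1 GB, an assumed threshold):
#   has_free_kb / 1048576 || echo "Less than 1 GB free on /" >&2
```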

Then after the end of the migration, all services worked except lstu.

I re-ran the installation of the Carton dependencies and it started again:

sudo cpanm Carton

cd /var/www/lstu/

sudo rm -rf local/

sudo carton install --deployment --without=sqlite --without=mysql

sudo systemctl restart lstu.service

So everything is working on my side.

Thanks for everything!

Hello,

I upgraded successfully to Debian 11. Thanks to all the devs!

Here is some information about the context, which may help you improve the migration.

I had the issue described in #112: right after switching to testing, no upgrade was performed.

The solution was to use yunohost tools upgrade as suggested by @ljf in #113

So I would suggest adding this to the switchtoTesting script.

After the upgrade, I had to force an upgrade of the Python-based apps:
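The manual steps after switching to testing might be sketched as follows. This is not what the switchtoTesting script does today. The function name is hypothetical, and the exact subcommand forms (the system target, the --pending flag) should be checked against yunohost tools --help on your version.

```shell
# Sketch: refresh the package lists, upgrade the system packages, then
# show the migrations that are still pending. Stops at the first failure.
post_switch_steps() {
    yunohost tools update &&
    yunohost tools upgrade system &&
    yunohost tools migrations list --pending
}

# Usage (as root, after running the switchtoTesting script):
#   post_switch_steps
```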

- synapse_ynh

- mautrix_signal_ynh

Overall, everything works well:

- Nextcloud

- Rainloop (in transition to SnappyMail)

- Roundcube

- Jirafeau

- WordPress

- Custom webapp

- Bitwarden (Vaultwarden)

- TTRSS

- DokuWiki

- Element

- Synapse (Matrix)

- Mautrix Signal, Whatsapp

- Grafana

- Netdata

- phpLDAPadmin

- phpMyAdmin

Thanks!

So far so good… In my case, Nextcloud and Onlyoffice work perfectly, but the migration stopped because dnsmasq died in the process.

Right now I need to launch the daemon manually, because the systemd service for dnsmasq does not work. Has anyone had the same problem? Any ideas how to solve it?

journalctl -n70 -u dnsmasq

-- Boot 4dc1d4c649ad48fab24849de9847adfb --

jun 26 17:39:35 domain.dlt systemd[1]: Starting dnsmasq - A lightweight DHCP and caching DNS server...

jun 26 17:39:36 domain.dlt dnsmasq[547]: Usage: /etc/init.d/dnsmasq {start|stop|restart|force-reload|dump-stats|status}

jun 26 17:39:36 domain.dlt systemd[1]: dnsmasq.service: Control process exited, code=exited, status=3/NOTIMPLEMENTED

jun 26 17:39:36 domain.dlt systemd[1]: dnsmasq.service: Failed with result 'exit-code'.

jun 26 17:39:36 domain.dlt systemd[1]: Failed to start dnsmasq - A lightweight DHCP and caching DNS server.

Of course, my domain is not domain.dlt. I changed the text to hide the real domain.
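The usage message in the log suggests systemd called /etc/init.d/dnsmasq with an action that script does not know. One possible reading, which is a guess and not confirmed anywhere in this thread, is that the unit file and the init script come from different package versions. The sketch below only detects that symptom in the journal text. The function name is hypothetical.

```shell
# Succeed if the journal text on stdin contains the init script's usage
# message, i.e. the script was called with an action it does not implement.
initscript_rejected_action() {
    grep -q 'Usage: /etc/init.d/dnsmasq'
}

# Usage (on the affected machine):
#   journalctl -n70 -u dnsmasq | initscript_rejected_action && {
#       systemctl cat dnsmasq            # which actions does the unit call?
#       dpkg -s dnsmasq | grep Version   # which package version is installed?
#   }
```

Comparing the actions listed in the unit with the ones in the usage message should show whether the two files really disagree.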

I had an issue with borg after the migration to bullseye and found a workaround:

When I run the command from the instructions nothing appears in the webadmin interface.

The following output is the log from running the command “curl https://install.yunohost.org/switchtoTesting | bash”.

Can I somehow manually force an update? Why is it not updating?

I’m running into an issue: when I add an email alias in the user portal and click “OK”, it goes to a 500 internal server error page. I have a feeling that this might be because I have the web API deactivated. It could also be because I have around 100 aliases. Are there any specific logs that would help?

Everything seems to work except

postgresql

https://paste.yunohost.org/koqajofuge

Any solutions?

Thank you @Gofannon, I wish your post was at the top of this thread. I had exactly the same issue as you, trying to upgrade from Debian 10/YunoHost 4.3. With the help of your concise instructions, I have been able to upgrade successfully!

I was able to get everything working, but I can’t upgrade to the latest version of Misskey for Bullseye on YunoHost.

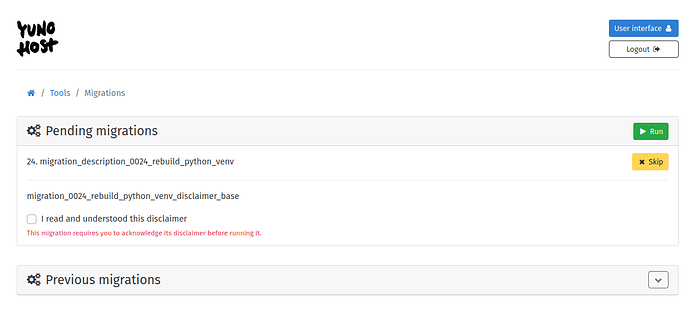

Update to version 11.0.9 done today, but now I have a pending migration with a message to validate: migration_0024_rebuild_python_venv_disclaimer_base, and I cannot understand what it means.

Edit: after a search in the code, it means:

“Following the upgrade to Debian Bullseye, some Python applications needs to be partially rebuilt to get converted to the new Python version shipped in Debian (in technical terms: what’s called the ‘virtualenv’ needs to be recreated). In the meantime, those Python applications may not work. YunoHost can attempt to rebuild the virtualenv for some of those, as detailed below. For other apps, or if the rebuild attempt fails, you will need to manually force an upgrade for those apps.”

Interesting… I have some Python-based projects…

In my case I always delete an existing venv and recreate it, because of: Python package: Upgrate/Restore failes on CI

So my apps must be just “forced upgrade” to fix everything… Maybe that’s a solution for all apps?
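For virtualenvs that YunoHost does not manage, one way to tell whether a venv still belongs to the old Python is to read the version recorded in its pyvenv.cfg. This is only a sketch: the function name and the path in the usage lines are hypothetical, and venvs created by very old virtualenv releases may not have that file at all.

```shell
# Print the Python version recorded in a virtualenv's pyvenv.cfg.
# Matches the "version = X.Y.Z" line only, not "version_info = ...".
venv_python_version() {
    sed -n 's/^version *= *//p' "$1/pyvenv.cfg"
}

# Usage (the path is a made-up example):
#   venv_python_version /var/www/mysite/venv      # compare with: python3 --version
#   python3 -m venv --clear /var/www/mysite/venv  # recreate it in place
```

After recreating the venv, the project's dependencies need to be reinstalled into it.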

I’ve done the Buster->Bullseye migration about a week ago and I’m very happy with it overall, especially as I have a couple of non-YunoHost sites that I am hosting.

Two of those have virtualenv python backends (one based on Django, the other based on Flask), so I too have the migration_0024_rebuild_python_venv_disclaimer_base migration to do.

My Tools->Migrations page looks like this:

I suspect there is supposed to be a disclaimer showing that I can read and understand, but I can’t see it. Is this a bug?

Thanks to all for a fabulous project!