The remainder of this thread shall be named

how to break your Yunohost

Ok ok… Things didn’t get more broken than they were… yet.

Before continuing from here, I made a full disk image of the server.

I didn’t know how to do that on the VPS infrastructure (SolusVM) I’m renting, but there is a helpful howto over at lowendspirit.com.

Migration of Debian from Buster to Bullseye has completed (apt-get dist-upgrade), but Yunohost got removed. Upon reinstall I skipped post-install per Aleks’ instructions.

Yunohost partly works. Yunohost-api does not run; there is no entry in /etc/init.d for either yunohost or yunohost-api
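A missing /etc/init.d entry is not conclusive on its own, since a service can also be defined as a systemd unit file (the second server later in this thread has its files in /etc/systemd/system/). Below is a minimal sketch of a check that looks in both places. The function name `where_is_service` and the optional ROOT argument are made up for illustration, and the list of directories is an assumption about a standard Debian layout rather than anything Yunohost-specific.

```shell
#!/bin/sh
# where_is_service NAME [ROOT]
# Prints every location under ROOT (default: the real filesystem) where an
# init script or systemd unit file for NAME exists.
# Returns 0 if at least one was found, 1 otherwise.
# Hypothetical helper, not part of Yunohost.
where_is_service() {
    name=$1
    root=${2:-}
    found=no
    for candidate in \
        "etc/init.d/$name" \
        "etc/systemd/system/$name.service" \
        "lib/systemd/system/$name.service"
    do
        # -L also catches symlinks whose target does not exist.
        if [ -e "$root/$candidate" ] || [ -L "$root/$candidate" ]; then
            echo "/$candidate"
            found=yes
        fi
    done
    [ "$found" = yes ]
}
```

Calling `where_is_service yunohost-api` on the server prints the paths it finds, or prints nothing and returns a non-zero status when the service is not defined anywhere in those directories.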

Yunohost recognizes that the migration to Bullseye did not complete satisfactorily, and --force-rerun does not have the effect I intended:

# yunohost tools migrations run 0021_migrate_to_bullseye

Info: Running migration 0021_migrate_to_bullseye...

Error: Migration 0021_migrate_to_bullseye did not complete, aborting. Error: The current Debian distribution is not Buster! If you already ran the Buster->Bullseye migration, then this error is symptomatic of the fact that the migration procedure was not 100% succesful (otherwise YunoHost would have flagged it as completed). It is recommended to investigate what happened with the support team, who will need the **full** log of the `migration, which can be found in Tools > Logs in the webadmin.

Info: The operation 'Run migrations' could not be completed. Please share the full log of this operation using the command 'yunohost log share 20220927-214414-tools_migrations_migrate_forward' to get help

# yunohost tools migrations run 0021_migrate_to_bullseye --force-rerun

Error: Those migrations are still pending, so cannot be run again: 0021_migrate_to_bullseye

I’ll try skipping this one, and continuing from there:

# yunohost tools migrations run 0021_migrate_to_bullseye --skip

Warning: Skipping migration 0021_migrate_to_bullseye...

# yunohost tools migrations run --accept-disclaimer

Info: Running migration 0024_rebuild_python_venv...

Info: Now attempting to rebuild the Python virtualenv for `borg-env`

Success! Migration 0024_rebuild_python_venv completed

That was fast!

It doesn’t repair yunohost-api. I hope a reinstall (and perhaps subsequent reboot) will:

# apt reinstall yunohost yunohost-admin

# service yuno (tab tab tab...)

yunomdns yunoprompt

These are new to me. Does yunohost-api still exist?

# yuno (tab tab ...)

yunohost yunohost-api

# yunohost-api

Yeah, that does run, and the web GUI is running now as well.

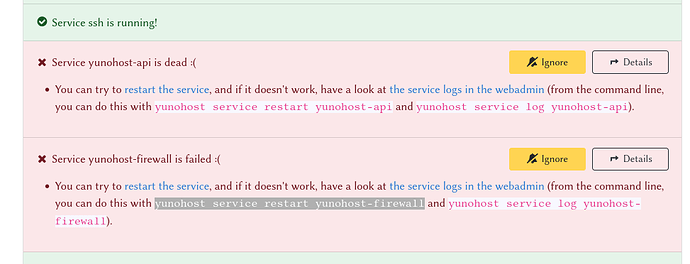

Not all is happy yet.

Up next: have a look at how the start script works on another Yunohost, and copy it over.

Of course, Yunohost to the rescue: the next section in diagnosis hands me the exact commands to regenerate the scripts

On reboot, no direct luck though. More tomorrow!

It is tomorrow! On another (nearly identical) Yunohost the migration ran without any problem. All services are active; diagnosis shows a single warning (relating to DNS).

That particular Yunohost does not have any yunohost* entry in /etc/init.d either, but it does have some files in /etc/systemd/system/

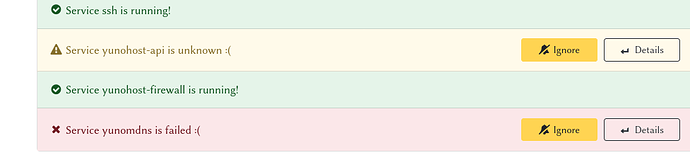

Over at this Yunohost, yunohost-api is masked: /etc/systemd/system/yunohost-api.service is a symlink to /dev/null. systemctl unmask yunohost-api removes the symlink, but does not create anything to enable yunohost-api. I can now enter the web GUI again after starting yunohost-api on the CLI via SSH.
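A masked unit is simply a symlink to /dev/null in the unit directory, so the state described above can be checked without systemctl. The following is a rough sketch only; the function name `unit_file_state` is hypothetical, and the directory is passed in as an argument so the check can be tried on a scratch directory first.

```shell
#!/bin/sh
# unit_file_state DIR UNIT
# Prints one of:
#   masked   DIR/UNIT is a symlink to /dev/null
#   present  DIR/UNIT exists as a file (or a symlink to a real file)
#   missing  nothing usable is there
# Hypothetical helper, not part of systemd or Yunohost.
unit_file_state() {
    dir=$1
    unit=$2
    path="$dir/$unit"
    if [ -L "$path" ] && [ "$(readlink "$path")" = "/dev/null" ]; then
        echo masked
    elif [ -e "$path" ]; then
        echo present
    else
        echo missing
    fi
}
```

For example, `unit_file_state /etc/systemd/system yunohost-api.service` would report `masked` before the unmask, and `missing` afterwards if nothing replaces the symlink.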

For yunohost-firewall I created the .service file on this host and copied the contents of /etc/systemd/system/yunohost-firewall.service from the other Yunohost into it:

# vi /etc/systemd/system/yunohost-firewall.service

"yunohost-firewall.service" [New File] 15 lines, 295 bytes written

# systemctl enable yunohost-firewall

Removed /etc/systemd/system/multi-user.target.wants/yunohost-firewall.service.

Created symlink /etc/systemd/system/multi-user.target.wants/yunohost-firewall.service → /etc/systemd/system/yunohost-firewall.service.

# systemctl start yunohost-firewall

… with some success:

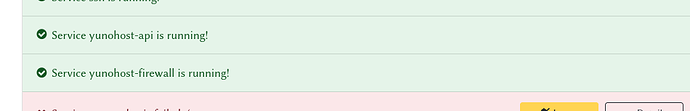

After doing the same for yunohost-api.service, killing the manual yunohost-api process and starting the service, that one is in green as well.

Maybe a line came out different after copy/paste: system configurations tells me those two service files deviate from the default. I forced a rewrite of the config file, and it seems OK now.
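To see whether a pasted service file differs from its source only by copy/paste whitespace, something along these lines could help. It is a sketch under assumptions: `compare_unit_files` is a made-up name, and it compares two local files, so the reference file from the other server has to be copied over first. Yunohost's own `yunohost tools regen-conf --dry-run --with-diff` should show a comparable diff against the shipped default.

```shell
#!/bin/sh
# compare_unit_files REFERENCE COPY
# Prints one of:
#   identical        the files are byte-for-byte the same
#   whitespace-only  they differ only in trailing whitespace, carriage
#                    returns or trailing blank lines
#   different        the content really differs
# Hypothetical helper for illustration.
compare_unit_files() {
    ref=$1
    copy=$2
    if cmp -s "$ref" "$copy"; then
        echo identical
        return 0
    fi
    # Strip trailing whitespace (including CR) from every line.
    # Command substitution also drops trailing newlines.
    ref_norm=$(sed 's/[[:space:]]*$//' "$ref")
    copy_norm=$(sed 's/[[:space:]]*$//' "$copy")
    if [ "$ref_norm" = "$copy_norm" ]; then
        echo whitespace-only
    else
        echo different
    fi
}
```

If the result is `different`, running `diff -u` on the two files shows which lines changed.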

The only service I can’t find is yunomdns, which I think I can live without for the moment.

As far as apps are concerned, Matrix/Synapse is having difficulties (needs troubleshooting; it seems totally gone…), but the others are all OK.

Woohoo! That was just so easy!

# yunohost backup restore synapse-pre-upgrade1

Warning: YunoHost is already installed

Do you really want to restore an already installed system? [y/N]: y

Info: Preparing archive for restoration...

Info: Restoring synapse...

Info: [....................] > Loading settings...

Info: [....................] > Validating restoration parameters...

Info: [+++++++.............] > Reinstalling dependencies...

Warning: Creating new PostgreSQL cluster 13/main ...

Warning: /usr/lib/postgresql/13/bin/initdb -D /var/lib/postgresql/13/main --auth-local peer --auth-host md5

Warning: The files belonging to this database system will be owned by user "postgres".

Warning: This user must also own the server process.

Warning: The database cluster will be initialized with locale "en_US.UTF-8".

Warning: The default database encoding has accordingly been set to "UTF8".

Warning: The default text search configuration will be set to "english".

Warning: Data page checksums are disabled.

Warning: fixing permissions on existing directory /var/lib/postgresql/13/main ... ok

Warning: creating subdirectories ... ok

Warning: selecting dynamic shared memory implementation ... posix

Warning: selecting default max_connections ... 100

Warning: selecting default shared_buffers ... 128MB

Warning: selecting default time zone ... Europe/London

Warning: creating configuration files ... ok

Warning: running bootstrap script ... ok

Warning: performing post-bootstrap initialization ... ok

Warning: syncing data to disk ... ok

Warning: Success. You can now start the database server using:

Warning: pg_ctlcluster 13 main start

Warning: Ver Cluster Port Status Owner Data directory Log file

Warning: 13 main 5433 down postgres /var/lib/postgresql/13/main /var/log/postgresql/postgresql-13-main.log

Warning: update-alternatives: using /usr/share/postgresql/13/man/man1/postmaster.1.gz to provide /usr/share/man/man1/postmaster.1.gz (postmaster.1.gz) in auto mode

Warning: I: Creating /var/lib/turn/turndb from /usr/share/coturn/schema.sql

Warning: Building PostgreSQL dictionaries from installed myspell/hunspell packages...

Warning: Removing obsolete dictionary files:

Info: [#######+............] > Recreating the dedicated system user...

Info: [########+...........] > Restoring directory and configuration...

Info: [#########...........] > Check for source up to date...

Info: '/opt/yunohost/matrix-synapse/lib64' wasn't deleted because it doesn't exist.

Info: '/opt/yunohost/matrix-synapse/.rustup' wasn't deleted because it doesn't exist.

Info: '/opt/yunohost/matrix-synapse/.cargo' wasn't deleted because it doesn't exist.

Info: [#########+..........] > Reload fail2ban...

Info: [##########+.........] > Restoring the PostgreSQL database...

Info: [###########+........] > Enable systemd services

Info: [############++++....] > Creating a dh file...

Info: [################++..] > Reconfiguring coturn...

Info: [##################..] > Configuring log rotation...

Info: [##################+.] > Configuring file permission...

Info: [###################.] > Restarting synapse services...

Info: The service matrix-synapse has correctly executed the action restart.

Info: [###################.] > Reloading nginx web server...

Info: [####################] > Restoration completed for synapse

Success! Restoration completed

apps:

synapse: Success

system: